In advance of her keynote address, “The Power of Human Connection & Empathy in the Age of AI,” at IBTM World (Nov. 18-20, Barcelona), Nathalie Nahai joins us to discuss how, in the right hands and with the right intentions, AI can be used for good.

What do you hope the audience takes away from your IBTM World keynote?

The lesson I hope to convey is that, despite the hype and the risks, we have enormous collective power and agency to shape the trajectory of the world to come. While the most prominent AI players would have us believe in the fundamental inevitability of an AI-dominated world (they have profits to make, after all), the truth is that the future is not yet written.

Rather than surrender to overwhelm and blindly adopt imperfect tools that may actually be eroding our privacy and losing companies money, we must test these technologies’ capabilities and examine their blind spots and mounting issues so that we can better overcome them. We need to critically assess whether the costs we are already seeing are bringing us closer to a more vibrant, regenerative future, or whether we are cannibalizing the very life support of the planet that sustains us.

The choice before us is stark: Do we really want to live in a world in which AI slop floods our media and news channels, where children lose hours of sleep and social time glued to increasingly persuasive devices, and where agentic workforces lay waste to human economies? Where data centers siphon off diminishing freshwater supplies, their smog-forming nitrogen oxide emissions contaminating entire communities? Do we really want to pour our precious resources and human labor into infrastructure that is rapaciously draining power grids at the very moment that we’re bursting through irreversible climate tipping points?

I refuse to believe that this dystopian dream is the best we can do.

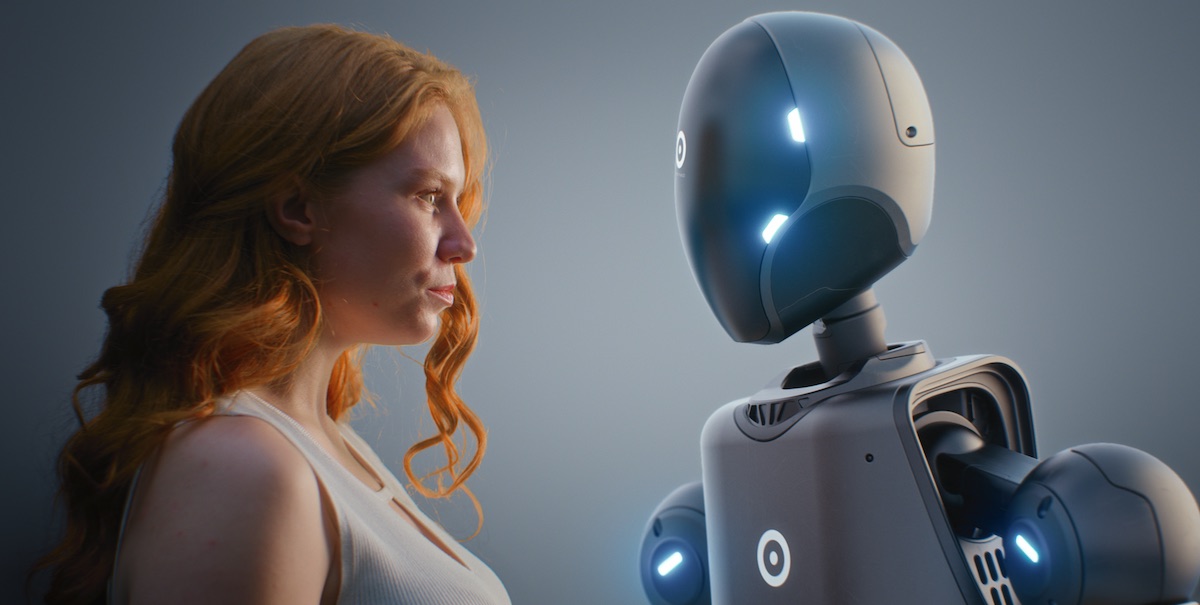

As AI extends to us the (fallible) power of gods, we must remember what it is that makes life worth living: one another. And it is from this place that we must ask ourselves: How might we use our technologies to serve and protect all that we love?

How, if at all, has the power or perception of empathy changed in recent years?

To answer this question, let’s define what human empathy is. Simply put, it’s our ability to identify with or understand another’s situation or feelings. In psychological terms, there are three distinct kinds of empathy:

- Cognitive: perspective taking, transposing ourselves into fictional people or places.

- Emotional: identifying and responding to someone’s state with appropriate emotion.

- Somatic: the physical echo we experience within our autonomic nervous system in response to someone else’s pleasure or pain.

Given the complexity and richness of human empathy, you’d be forgiven for assuming it would be hard to replicate or hack. And yet, one of the most disturbing shifts in our perception of empathy relates to the recent proliferation and widespread adoption of largely untested, unregulated AI chatbots.

Whether explicitly marketed as AI companions or not, products such as ChatGPT (in its GPT-4 and GPT-5 iterations, known for their sycophancy) are prime examples of tools that have been carefully designed to perform empathy in a bid to boost adoption and hype around what are, at their core, flawed applications.

At first glance, the side effects may seem innocuous enough (e.g., shifts in language use and the homogenization of tone), but for some people, they can mean the erosion of relationships, well-being and even cognitive capacities. As unscrupulous companies chase shareholder value above all else, their drive to maximize engagement by exploiting our needs for belonging, companionship and understanding is already exacting a hefty price on many. And as we increasingly turn to chatbots to fulfil social and therapeutic functions we might previously have sought from other people, the very concept of what empathy is can start to unravel.

Far from the messy, gritty, poignant kind you might find in a trusted friend, the performative “empathy” on offer from chatbots is the slippery, feckless, frictionless sort that would acquiesce to every demand, backtracking in a bid to appease and keep you talking—and it’s by design. Not only are these anthropomorphic, bias-confirming tools always on (ready to “listen” at 3 a.m. as you battle with your existential dread), they are also carefully calibrated to engender intimacy, making a parasocial play for our attention. This might sound absurd, fantastical even, if it weren’t already happening in real time.

Amongst a growing litany of complaints levied against unregulated chatbots, reports of their role in the breakdown of relationships and the violation of mental health standards sit alongside heartbreaking cases of delusions, psychosis and teen suicide. These painful, life-altering consequences carry profound moral and societal implications, not only for those directly afflicted, but for all of us. And with the use of generative AI tools finally appearing to lose traction, it may be that we’re starting to course correct, granting us a much-needed reprieve in which to focus on the things that really matter (hint: it’s not drowning your colleagues in AI “workslop” or killing off social media with fake videos).

Is the growing use of AI impacting how we engage with one another? Are there ways in which you’re seeing people use AI that are actually detrimental to developing or maintaining genuine human relationships?

Yes, in multiple directions. As public-facing AI becomes increasingly embedded in daily life (from standalone chatbots to the filters you find on social media), it appears to be affecting many aspects of how we engage not only with one another, but also with ourselves. Whether shaping how we speak or how we think about our body image, AI tools deployed without appropriate regulations and guardrails can have lasting, deleterious impacts on how we relate to the world.

From synthetic relationships and artificial intimacy eroding our capacity or willingness to tolerate friction in human relationships to the impacts of keeping our deceased loved ones “alive” through digital doppelgangers, there are all kinds of ways that AI-enabled platforms could shape how we live, love and grieve. And if, as OpenAI recently reported, AI hallucinations are mathematically inevitable (not simply engineering flaws), then many of the challenges we face today are unlikely to disappear any time soon.

And yet it doesn’t have to be this way.

When AI is deployed thoughtfully, there are extraordinary examples of it being used to bring people into closer kinship with the wider web of life, from the Earth Species Project, which seeks to decode animal communication “to illuminate the diverse intelligences on Earth,” to solar-powered acoustic monitoring devices helping communities prevent the illegal logging of the Ecuadorian forest.

AI tools also show potential in helping us secure more reliable, consistent solar energy, and in medicine, AI-powered technologies are showing promise, from enhancing surgery with robotics and AI guidance to the development of personalized cancer vaccines. Perhaps most encouraging is the rise of pioneers like Priya Donti (co-founder of the global non-profit Climate Change AI), who is blazing trails in the movement to harness technology in service of the regeneration of life, finding novel, impactful interventions to mitigate the climate crisis. Whatever future we can dream of, in the right hands (and with the right intentions), artificial intelligence can serve as a phenomenal force for good.